The wind of change - change data capture in data factory

- Chen Hirsh

- Feb 10, 2023

- 3 min read

The “Holy Grail” of data engineering is probably the ability to synchronize two data stores, in as close to real time, with little or no work. It means we automatically detect changes (i.e insert, updates, deletes) on the source system, and apply them on the target system.

Microsoft recently added a new feature to Azure data factory, called “Changed data capture” (from now I’ll call them ADF and CDC, for short), that supposed to help us do just that. Let’s see how it works and if we really found the “Holy Grail”.

Please note that this feature in ADF is still in preview and may change in the future without warning.

Also note that there are a couple of options to use CDC in ADF. I will demonstrate using a table with an incremented time field. There is also an option to use an external system CDC feature (like SQL server) as the source. Maybe I’ll blog about that in the future.

We will set up sync from a table in Azure SQL database to a directory of Parquet files on Azure data lake storage.

Prerequisites:

You should have the following services set up:

Azure SQL database

Azure data factory

Azure data lake storage account

I created a demo table in Azure SQL database.

Here is the code to create the table and load it with data continuously:

CREATE TABLE dbo.dripply (

row_id INT IDENTITY,

insert_time DATETIME DEFAULT GETDATE()

)

--run to add new rows every 5 seconds for an hour

DECLARE @end_time DATETIME = dateadd(hour,1,getdate())

WHILE GETDATE()<@end_time

BEGIN

INSERT INTO dbo.dripply DEFAULT Values

WAITFOR DELAY '00:00:05'

CONTINUE

endBefore we dive in, on overview of how the solution works:

On our SQL table, every time a row changes, the update time field is updates to current time.

Data factory “remembers” the highest time from the previous run, and query SQL for all rows with time greater than that value.

Let’s go to ADF, and start creating the process.

A new folder was introduced recently under Factory Resources, called Change data capture (preview).

On that folder click the 3 dots sign and then click on “New mapping (preview)”

On the first screen, we need to define the source.

Choose your linked service or create one. Your options are CosmosDB, SQL server, or files source.

Choose the table\s you want to sync and Click continue.

On the target screen, choose your target type and a linked service. In my case I will be writing to Parquet files, so I will need to choose a container on Azure data lake to store the files.

A new mapping object is created. You can edit the field mapping, and add more tables. After creation, the mapping job is not started. To start the mapping run, you need to first publish it, and click start.

Now to monitor the CDC mapping, we can go to the monitor part of ADF, and click on change data capture, and then on our newly created mapping.

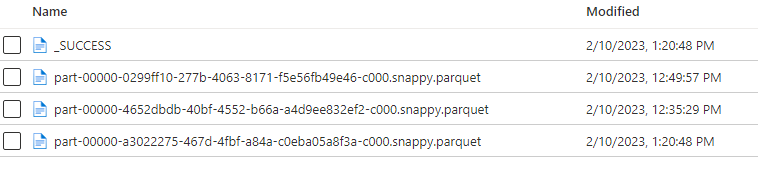

Checking the Azure data lake folder, the data has been written to the target folder.

Each run creates a new file.

I have noticed the following things you need to pay attention to:

You need to have data in your table prior to starting the mapping in ADF. Otherwise CDC gave an error: “Upper bound checkpoint could not be fetched” and I could not make it work. I had to delete and restart the mapping.

It seems that the first run only sets the checkpoint and does not bring any data. Rows will start coming only on the second run (after 15 minutes, or whatever you choose)

Deletes are not synced to the target, because ADF only take changes occurring to the update time field (insert and update). You need to implement another way to sync deletes.

ADF will bring up a cluster to process your data every time it works (depends on what you choose in latency). So, there will be a bill to pay.

One last thing, If you click on the latency button, you can choose how often data will be updated. But also, there a gray option for “Real-time”. Looks like a promo to a very cool feature. I hope it will be release and I can blog about it in the future.

Hi Chen Hirsh, good day to you on points you mentioned under "I have noticed the following things you need to pay attention to:" According to my recent experience with ADF CDC resource, here is my findings. 1. This issue has been fixed. 2. One can do initial load of the data this feature will be available soon. 3.Incremental extraction doesn't capture complete information about the changes made to a particular row. To capture all DML operations and obtain comprehensive change data, one can leverage the native CDC (Change Data Capture) feature available in SQL databases.